The following enterprise storage designs are based on commodity Intel x86 hardware and open source software. I have chosen Supermicro for the commodity hardware, yet similar hardware is available from Asian ODMs including Huawei, Quanta, Compal Electronics, Inventec and Wistron.

A Linux NFS server is very stable and highly available. Performance with a large number of spindles is easy to achieve; for performance details see here. Performance can be further improved with flash caching (dm-cache) or a tier of SSD-based storage.
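Why a large number of spindles (and a flash cache) helps can be sketched with rule-of-thumb arithmetic. The sketch below assumes roughly 140 IOPS per 10K SAS disk, the commonly quoted RAID write penalties (2 for RAID 10, 4 for RAID 5, 6 for RAID 6) and illustrative SSD/HDD latencies; these are assumptions, not measurements of this hardware.

```python
# Rough sizing sketch for a spindle-based array (rule-of-thumb numbers, not measurements).

# Commonly quoted RAID write penalties: back-end I/Os generated per front-end write.
RAID_WRITE_PENALTY = {"raid10": 2, "raid5": 4, "raid6": 6}


def array_iops(spindles: int, iops_per_disk: float, read_fraction: float, raid: str) -> float:
    """Front-end IOPS an array can sustain for a given read/write mix.

    Reads cost one back-end I/O; writes cost RAID_WRITE_PENALTY[raid] back-end I/Os.
    """
    raw = spindles * iops_per_disk
    penalty = RAID_WRITE_PENALTY[raid]
    return raw / (read_fraction + (1 - read_fraction) * penalty)


def effective_latency_ms(hit_ratio: float, ssd_ms: float, hdd_ms: float) -> float:
    """Average latency when a flash cache (e.g. dm-cache) serves hit_ratio of requests."""
    return hit_ratio * ssd_ms + (1 - hit_ratio) * hdd_ms


if __name__ == "__main__":
    # Assumed: 24 x 10K SAS disks at ~140 IOPS each, 70% reads.
    for level in ("raid10", "raid5", "raid6"):
        print(level, round(array_iops(24, 140, 0.7, level)))
    # Assumed latencies: ~0.2 ms SSD hit, ~7 ms 10K HDD, 80% cache hit ratio.
    print(f"latency ms: {effective_latency_ms(0.8, 0.2, 7.0):.2f}")
```

With these assumptions the 24 disks come out at roughly 2,600 IOPS in RAID 10 versus about 1,340 in RAID 6, doubling the spindle count doubles the estimate, and an 80% cache hit ratio cuts average latency from 7 ms to about 1.6 ms. Real results also depend on the controller, its cache and the workload.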

This storage would work well with vSphere, since vSphere already provides significant storage functionality such as snapshots, thin provisioning, Storage vMotion and replication.

I prefer Linux due to its open source nature, but please note that the same hardware could also run Windows, which would make a great CIFS file server. Furthermore, Windows Server 2012 provides data deduplication for NTFS volumes.

1. Single Node - Availability 99.5%

- Red Hat Enterprise Linux 7 / CentOS 7 - simple NFS server with a large number of internal disks

- One node with Dual Xeon E5-2600 V3 CPUs, 32GB+ memory

- LSI 3108 MegaRAID controller supporting RAID levels 0, 1, 1E, 5, 6, 10 and 50

- Highly available hardware with redundant power supplies, fans and disks

- 2 x 10Gb Ethernet for NFS

- dm-cache for flash caching (optional)

- XFS filesystem

- Can be further expanded with up to 7 SAS expansion drawers, each with dual expanders: maximum = 7 x 264TB = 1,848TB (roughly 1.8PB raw).

- The hardware vendor can offer 24x7 on-site support
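The expansion figure can be checked with simple arithmetic. This sketch assumes each 264TB drawer is a 44-bay 3.5" JBOD filled with 6TB drives (44 x 6TB = 264TB), and, as a hypothetical layout, splits each drawer into four RAID 6 groups of 11 disks to show how much of the raw capacity is left after parity.

```python
# Hypothetical sizing helper; decimal TB, before filesystem overhead.
# Assumption: each 264TB drawer is a 44-bay 3.5" JBOD filled with 6TB drives.


def raw_tb(drawers: int, disks_per_drawer: int, disk_tb: float) -> float:
    """Raw capacity: every bay populated, no RAID overhead."""
    return drawers * disks_per_drawer * disk_tb


def raid6_usable_tb(disks: int, group_size: int, disk_tb: float) -> float:
    """Usable capacity when disks are split into RAID 6 groups of group_size.

    Each RAID 6 group gives up two disks' worth of capacity to parity.
    Leftover disks that do not fill a whole group are not counted.
    """
    groups = disks // group_size
    return groups * (group_size - 2) * disk_tb


if __name__ == "__main__":
    drawers, bays, disk_tb = 7, 44, 6.0
    print("raw per drawer TB:", raw_tb(1, bays, disk_tb))   # 264.0
    print("raw total TB:", raw_tb(drawers, bays, disk_tb))  # 1848.0
    # Assumed layout: 4 RAID 6 groups of 11 disks (9 data + 2 parity) per drawer.
    print("usable total TB:", drawers * raid6_usable_tb(bays, 11, disk_tb))  # 1512.0
```

Wider RAID 6 groups waste less capacity on parity but take longer to rebuild after a disk failure, so the group size is a trade-off rather than a fixed rule.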

2. Dual Node for High Availability - Availability 99.99%

Storage Bridge Bay, or SBB, is an open standard for storage disk array and computer manufacturers to create controllers and chassis that use multi-vendor interchangeable components. The SBB working group was formed in 2006 by Dell, EMC, LSI and Intel, and the standards are openly published.

This design uses industry standard SBB hardware with open source to provide high availability:

- Red Hat Enterprise Linux 7 / CentOS 7 - simple NFS server with a large number of disks

- Clustered for high availability using Red Hat Cluster Suite (in RHEL 7, the Pacemaker-based High Availability Add-On)

- Two nodes, each with Dual Xeon E5-2600 V3 CPUs, 32GB+ memory

- Highly available hardware with redundant power supplies, fans and disks

- LVM-based software RAID

- 4 x 10Gb Ethernet for NFS

- dm-cache for flash caching (optional)

- XFS filesystem

- Can be further expanded with up to 7 SAS expansion drawers, each with dual expanders: maximum = 7 x 264TB = 1,848TB (roughly 1.8PB raw).

- Remote replication can be achieved with rsync, vSphere Replication or Zerto

- The hardware vendor can offer 24x7 on-site support
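The availability figures in the single-node and dual-node headings translate into expected yearly downtime as (1 - availability) x 8760 hours. A small sketch of that arithmetic, ignoring leap years:

```python
# Convert an availability percentage into expected downtime per year.
HOURS_PER_YEAR = 365 * 24  # 8760, ignoring leap years


def downtime_per_year_hours(availability: float) -> float:
    """Expected unavailable hours per year for an availability given as a fraction."""
    return (1 - availability) * HOURS_PER_YEAR


if __name__ == "__main__":
    for name, a in (("single node", 0.995), ("dual node", 0.9999)):
        hours = downtime_per_year_hours(a)
        print(f"{name}: {hours:.2f} h/year ({hours * 60:.1f} min/year)")
```

At 99.5% the single node may be down for about 43.8 hours a year, while 99.99% allows under an hour (about 53 minutes), which is the practical difference the clustered design buys.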

High availability is provided by RHCS clustering. The primary node owns the shared storage and the service IP address(es) through which clients access NFS. If the primary node fails, the secondary node performs the following tasks:

- Take over IP address(es)

- Take over storage

- Continue serving NFS traffic
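The takeover steps above can be modelled as a toy active/passive cluster. This is a simplified sketch, not how RHCS or Pacemaker is implemented: Node, Cluster, RESOURCES and the 10-second heartbeat timeout are hypothetical, and the start order (storage, then NFS, then the floating IP last so clients only reach the new node once it can serve them) is an assumption.

```python
"""Toy model of active/passive NFS failover.

Not the RHCS/Pacemaker implementation: Node, Cluster, RESOURCES and the
heartbeat timeout are hypothetical names and values for illustration.
"""
from dataclasses import dataclass, field

# Assumed start order: storage first, NFS next, floating IP last.
RESOURCES = ("shared storage", "NFS server", "service IP")


@dataclass
class Node:
    name: str
    last_heartbeat: float = 0.0
    running: list = field(default_factory=list)

    def start_all(self) -> None:
        """Bring the resources up in dependency order."""
        self.running = list(RESOURCES)

    def fence(self) -> None:
        """Stand-in for fencing (STONITH): a fenced node holds no resources."""
        self.running = []


@dataclass
class Cluster:
    primary: Node
    secondary: Node
    heartbeat_timeout: float = 10.0  # seconds, hypothetical

    def check(self, now: float) -> str:
        """Fail over when the primary has been silent longer than the timeout."""
        if now - self.primary.last_heartbeat <= self.heartbeat_timeout:
            return f"{self.primary.name} healthy"
        # Fence first so the failed node cannot keep writing to shared storage.
        self.primary.fence()
        self.secondary.start_all()
        self.secondary.last_heartbeat = now
        self.primary, self.secondary = self.secondary, self.primary
        return f"failed over to {self.primary.name}"


if __name__ == "__main__":
    a, b = Node("node-a"), Node("node-b")
    a.start_all()
    cluster = Cluster(a, b)
    print(cluster.check(now=5.0))   # node-a healthy
    print(cluster.check(now=30.0))  # failed over to node-b
    print(b.running)                # ['shared storage', 'NFS server', 'service IP']
```

The step that matters most is fencing before takeover: XFS is not a cluster filesystem, so both nodes mounting it at the same time would corrupt it.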

http://www.supermicro.com/products/nfo/sbb.cfm

Dual node for high availability: two separate nodes, each with dual CPU sockets and 10Gb Ethernet, sharing a total of 24 x 2.5" drives:

Same dual node server without CPUs and memory:

3. Example of SAS Expansion Drawers

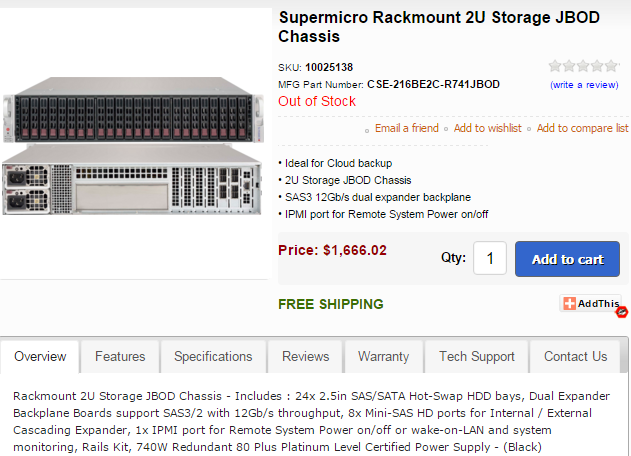

2.5" SAS JBOD expansion - 24 disks:

2.5" SAS JBOD expansion - 72 disks (48 on front, 24 on rear):

3.5" SAS JBOD expansion - 44 disks (24 on front, 20 on rear):

2.5"/3.5" SAS JBOD expansion - 90 disks:
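The raw capacity of each drawer type above is simply bays x drive size. This sketch assumes the 2.5" drawers are filled with the 1.2TB 10K SAS drives and the 3.5"-capable drawers with the 6TB drives from the pricing list; other drive choices scale the same way.

```python
# Raw capacity per expansion drawer, all bays filled (decimal TB, no RAID).
# Assumption: 2.5" drawers use the 1.2TB 10K SAS drives, 3.5" drawers the 6TB drives.
DRAWERS = [
    ('2.5" JBOD, 24 bays', 24, 1.2),
    ('2.5" JBOD, 72 bays', 72, 1.2),
    ('3.5" JBOD, 44 bays', 44, 6.0),
    ('2.5"/3.5" JBOD, 90 bays', 90, 6.0),
]


def raw_capacity_tb(bays: int, disk_tb: float) -> float:
    """Raw capacity of one drawer with every bay populated."""
    return bays * disk_tb


if __name__ == "__main__":
    for name, bays, disk_tb in DRAWERS:
        print(f"{name}: {raw_capacity_tb(bays, disk_tb):.1f} TB")
```

Small 2.5" 10K drives give more spindles (and IOPS) per TB, while large 3.5" 7.2K drives give far more capacity per drawer, so the choice follows the workload.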

4. Example HDD/SSD Pricing from Newegg:

2.5" 900GB 10K SAS drives:

2.5" 1.2TB 10K SAS drives:

3.5" 6TB 7.2K SAS drives:

3.5" 6TB 7.2K SATA drives:

2.5" 800GB SATA SSDs (endurance 3 or 10 DWPD):
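A DWPD (drive writes per day) rating converts into a lifetime write budget as capacity x DWPD x 365 x years. This sketch assumes the rating applies over a 5-year warranty period; the real period should be taken from the drive's datasheet.

```python
# Lifetime write budget implied by a DWPD (drive writes per day) rating.
# Assumption: DWPD is quoted over a 5-year warranty; check the actual datasheet.


def total_writes_tb(capacity_tb: float, dwpd: float, years: float) -> float:
    """Total data that can be written: capacity x DWPD x days in the period."""
    return capacity_tb * dwpd * 365 * years


if __name__ == "__main__":
    for dwpd in (3, 10):
        print(f"800GB @ {dwpd} DWPD: {total_writes_tb(0.8, dwpd, 5):,.0f} TB written")
```

Under that assumption the 3 DWPD drive is rated for about 4,380TB of writes and the 10 DWPD drive for about 14,600TB, which matters when the SSDs serve as a write-heavy dm-cache.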

Update 15/1/2016 - IBM Storwize V7000 is also built on Storage Bridge Bay with Linux:

Storage Bridge Bay is a commodity standard that is widely supported by the storage industry:

For details on how to build a 1PB CIFS/NFS server for $64,495, see:

http://blog.zorangagic.com/2016/01/storage-216tb-15292-1pb-64495.html

Other References:

http://www.thinkmate.com/ (Supermicro reseller)
