https://docs.minio.io/

https://en.wikipedia.org/wiki/Minio

Minio is a cloud storage server written in Go, released under the Apache License v2, and compatible with the Amazon S3 v2/v4 API. It was founded by Anand Babu Periasamy, who also founded and wrote Gluster.

Minio server can tolerate up to (N/2)-1 node failures in a distributed setup. In addition, you may configure Minio server to continuously mirror data between Minio and any Amazon S3 compatible server.
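The failure-tolerance figure above is simple arithmetic. Here is a minimal sketch that takes the (N/2)-1 formula at face value and assumes N is an even number of nodes. The function name `max_node_failures` is hypothetical and is not part of Minio:

```python
def max_node_failures(nodes: int) -> int:
    """Return (N/2)-1, the tolerated node failures quoted in the text.

    Assumption: an even node count of at least 2. This only evaluates the
    formula; it does not query a running Minio cluster.
    """
    if nodes < 2 or nodes % 2 != 0:
        raise ValueError("expected an even node count of at least 2")
    return nodes // 2 - 1


for n in (4, 8, 16):
    print(n, "nodes ->", max_node_failures(n), "tolerated node failures")
```

By this formula, the 8-node setup shown later in these notes would keep working with up to 3 nodes down.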

https://docs.minio.io/docs/minio-quickstart-guide

Docker:

docker pull minio/minio

docker run -p 9000:9000 minio/minio server /data

Linux: https://dl.minio.io/server/minio/release/linux-amd64/minio

./minio server /data

Client: https://dl.minio.io/client/mc/release/linux-amd64/mc

Windows: https://dl.minio.io/server/minio/release/windows-amd64/minio.exe

minio.exe server D:\Photos

Client: https://dl.minio.io/client/mc/release/windows-amd64/mc.exe

Minio server config file - config.json:

https://docs.minio.io/docs/minio-server-configuration-guide

Test using browser:

Point your web browser to the Minio server at http://123.123.123.123:9000 to confirm that the server has started successfully.

Test using aws-cli:

- aws configure --profile minio (use keys from minio startup)

- aws configure set default.s3.signature_version s3v4

- aws --endpoint-url http://123.123.123.123:9000 --profile minio s3 ls

- aws --endpoint-url http://123.123.123.123:9000 --profile minio s3 mb s3://mybucket

- aws --endpoint-url http://123.123.123.123:9000 --profile minio s3 cp ztest.jpg s3://mybucket

- aws --endpoint-url http://123.123.123.123:9000 --profile minio s3 ls s3://mybucket
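Every aws-cli call above repeats the same --endpoint-url and --profile flags. Here is a small sketch of a helper that builds those command lines so the shared parts are written once. The helper name `aws_s3_command` is hypothetical, and the address is the placeholder used in these notes. Nothing is executed; the commands are only printed:

```python
import shlex
from typing import List


def aws_s3_command(endpoint: str, profile: str, *s3_args: str) -> List[str]:
    """Build the argv for an `aws s3 ...` call against a Minio endpoint."""
    return ["aws", "--endpoint-url", endpoint, "--profile", profile, "s3", *s3_args]


ENDPOINT = "http://123.123.123.123:9000"  # placeholder address from the notes

steps = [
    ("ls",),                               # list buckets
    ("mb", "s3://mybucket"),               # make a bucket
    ("cp", "ztest.jpg", "s3://mybucket"),  # upload a file
    ("ls", "s3://mybucket"),               # list the bucket contents
]

for step in steps:
    print(shlex.join(aws_s3_command(ENDPOINT, "minio", *step)))
```

To actually run a step, the returned list can be passed to `subprocess.run(cmd, check=True)`, assuming aws-cli is installed and the minio profile is configured.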

Test using Minio client (mc):

- mc.exe --help

- mc config host add minio http://192.168.1.51 BKIKJAA5BMMU2RHO6IBB V7f1CwQqAcwo80UEIJEjc5gVQUSSx5ohQ9GSrr12

- mc config host add s3 https://s3.amazonaws.com BKIKJAA5BMMU2RHO6IBB V7f1CwQqAcwo80UEIJEjc5gVQUSSx5ohQ9GSrr12

- mc ls play

- alias ls='mc ls'

- alias cp='mc cp'

- alias cat='mc cat'

- alias mkdir='mc mb'

- alias pipe='mc pipe'

- alias find='mc find'

- mc mirror localdir/ play/mybucket

- mc mirror -w localdir play/mybucket # Continuously watch for changes on a local directory and mirror the changes

- mc find s3/bucket --name "*.jpg" --watch --exec "mc cp {} play/bucket"

Distributed Minio:

Minio in distributed mode lets you pool multiple drives (even on different machines) into a single object storage server. As drives are distributed across several nodes, distributed Minio can withstand multiple node failures and yet ensure full data protection.

Minio in distributed mode can help you set up a highly available storage system with a single object storage deployment. With distributed Minio, you can make optimal use of storage devices, irrespective of their location in a network.

Distributed Minio uses erasure code to protect against multiple node/drive failures and bit rot. Because the minimum number of disks required for distributed Minio is 4 (the same minimum that erasure coding requires), erasure code kicks in automatically as soon as you launch distributed Minio.
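To make the idea of erasure coding concrete, here is a toy sketch using the simplest possible scheme: a single XOR parity shard. This is not Minio's actual algorithm (Minio can survive several simultaneous losses, whereas this toy can rebuild only one). All names are hypothetical and serve only to show how redundant data lets a lost drive be reconstructed:

```python
from functools import reduce
from typing import List, Optional


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """XOR two equal-length byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))


def make_parity(shards: List[bytes]) -> bytes:
    """XOR all equal-length data shards into one parity shard."""
    return reduce(xor_bytes, shards)


def recover(shards: List[Optional[bytes]], parity: bytes) -> List[Optional[bytes]]:
    """Rebuild at most one missing shard (marked None) from the parity."""
    missing = [i for i, shard in enumerate(shards) if shard is None]
    if len(missing) > 1:
        raise ValueError("single parity can rebuild only one lost shard")
    repaired = list(shards)
    if missing:
        present = [shard for shard in shards if shard is not None]
        # parity XOR every surviving shard leaves exactly the lost shard
        repaired[missing[0]] = reduce(xor_bytes, present, parity)
    return repaired


data = [b"AAAA", b"BBBB", b"CCCC"]  # three data "drives"
parity = make_parity(data)          # a fourth "drive" holding parity
damaged = [data[0], None, data[2]]  # the second drive is lost
print(recover(damaged, parity))
```

Three data shards plus one parity shard make four "drives", which is one way to see why a four-disk minimum is natural for an erasure-coded setup.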


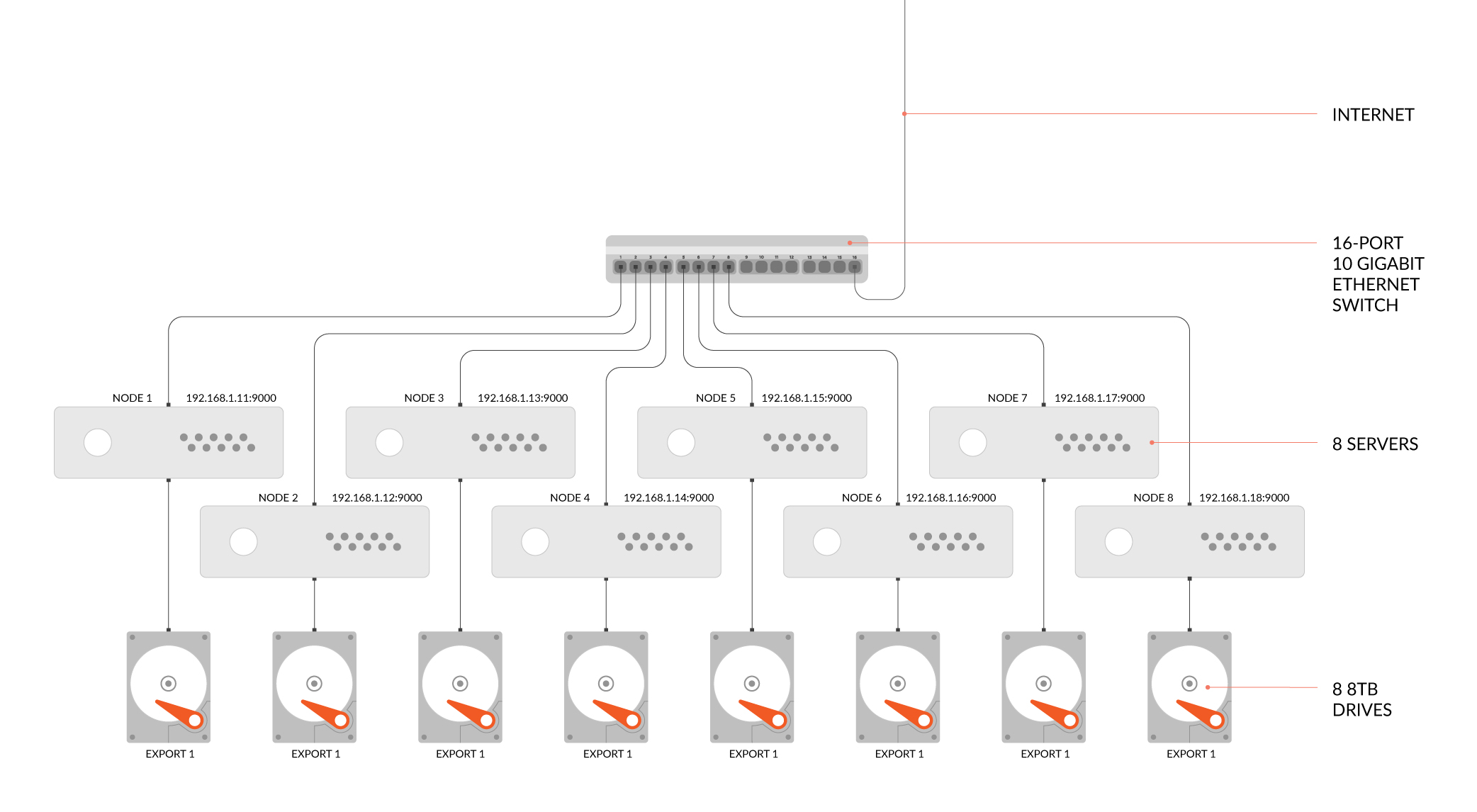

Example 1: one drive on each of 8 nodes. Run the same commands on every node.

export MINIO_ACCESS_KEY=<ACCESS_KEY>

export MINIO_SECRET_KEY=<SECRET_KEY>

minio server http://192.168.1.11/export1 http://192.168.1.12/export2 \
http://192.168.1.13/export3 http://192.168.1.14/export4 \
http://192.168.1.15/export5 http://192.168.1.16/export6 \
http://192.168.1.17/export7 http://192.168.1.18/export8

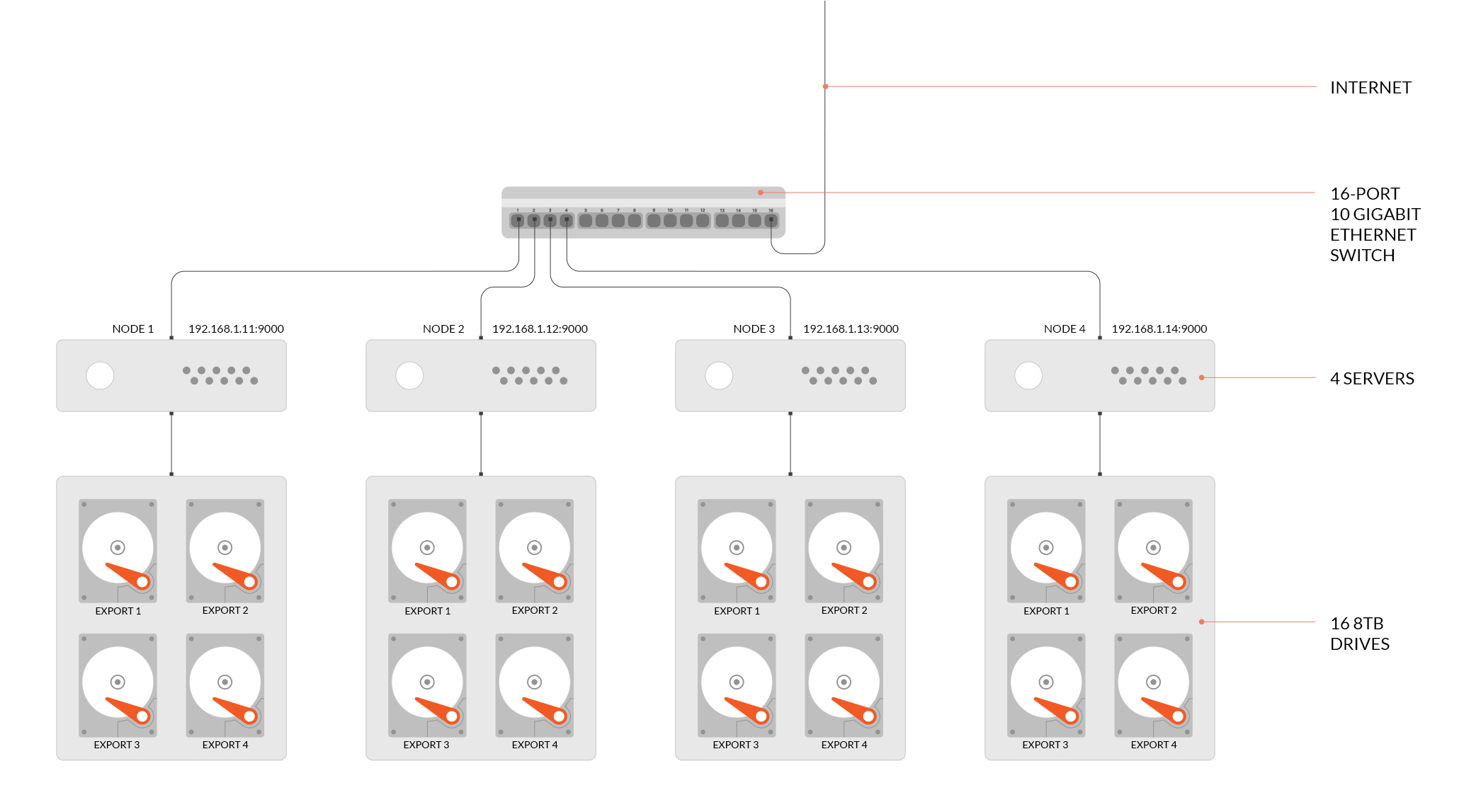

Example 2: four drives on each of 4 nodes. Run the same commands on every node.

export MINIO_ACCESS_KEY=<ACCESS_KEY>

export MINIO_SECRET_KEY=<SECRET_KEY>

minio server http://192.168.1.11/export1 http://192.168.1.11/export2 \
http://192.168.1.11/export3 http://192.168.1.11/export4 \
http://192.168.1.12/export1 http://192.168.1.12/export2 \
http://192.168.1.12/export3 http://192.168.1.12/export4 \
http://192.168.1.13/export1 http://192.168.1.13/export2 \
http://192.168.1.13/export3 http://192.168.1.13/export4 \
http://192.168.1.14/export1 http://192.168.1.14/export2 \
http://192.168.1.14/export3 http://192.168.1.14/export4
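Typing out long endpoint lists by hand is error-prone. The sketch below generates the list for a layout where every node has the same number of drives, named export1, export2, and so on, as in the 4-node command above. The function name `minio_endpoints` is hypothetical, and the 4-drive minimum is taken from the text above:

```python
from typing import List


def minio_endpoints(hosts: List[str], drives_per_host: int) -> List[str]:
    """List one http://host/exportN endpoint per drive, host by host."""
    endpoints = [
        f"http://{host}/export{drive}"
        for host in hosts
        for drive in range(1, drives_per_host + 1)
    ]
    if len(endpoints) < 4:
        # minimum stated in the notes for distributed mode
        raise ValueError("distributed Minio needs at least 4 drives")
    return endpoints


hosts = [f"192.168.1.{n}" for n in range(11, 15)]  # 192.168.1.11 to 192.168.1.14
print("minio server " + " ".join(minio_endpoints(hosts, 4)))
```

The printed line is the same command as above, written on a single line, and it still has to be run on each of the nodes.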

https://cloud.netapp.com/blog/amazon-s3-as-a-file-system

https://linuxroutes.com/mount-s3-bucket-linux-centos-rhel-ubuntu-using-s3fs/

- yum remove fuse fuse-s3fs

- yum install openssl-devel gcc libstdc++-devel gcc-c++ fuse fuse-devel curl-devel libxml2-devel mailcap git automake

- Download and compile FUSE (lines starting with # are commands run as root):

- #cd /usr/src/; wget https://github.com/libfuse/libfuse/releases/download/fuse-3.0.1/fuse-3.0.1.tar.gz

- #tar xzf fuse-3.0.1.tar.gz

- #cd fuse-3.0.1

- #./configure --prefix=/usr/local

- #make && make install

- #export PKG_CONFIG_PATH=/usr/local/lib/pkgconfig

- #ldconfig

- #modprobe fuse

- Download and compile S3FS (again as root):

- #cd /usr/src/

- #wget https://github.com/s3fs-fuse/s3fs-fuse/archive/v1.82.tar.gz

- #tar xzf v1.82.tar.gz

- #cd s3fs-fuse-1.82

- #./autogen.sh

- #./configure --prefix=/usr --with-openssl

- #make

- #make install

- echo AWS_ACCESS_KEY_ID:AWS_SECRET_ACCESS_KEY > ~/.passwd-s3fs; chmod 600 ~/.passwd-s3fs

- mkdir /tmp/cache; mkdir /s3mnt_pt; chmod 777 /tmp/cache /s3mnt_pt; s3fs -o use_cache=/tmp/cache mybucket /s3mnt_pt
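The credentials step above (echo into ~/.passwd-s3fs followed by chmod 600) briefly leaves the file with default permissions. Here is a sketch of the same step that creates the file with owner-only permissions from the start. The function name `write_passwd_s3fs` is hypothetical, and the demo writes to a temporary directory rather than to the real home directory:

```python
import os
import tempfile


def write_passwd_s3fs(path: str, access_key: str, secret_key: str) -> None:
    """Write ACCESS_KEY:SECRET_KEY to path with mode 600 (owner read/write)."""
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_TRUNC, 0o600)
    with os.fdopen(fd, "w") as handle:
        handle.write(f"{access_key}:{secret_key}\n")
    os.chmod(path, 0o600)  # enforce the mode even if the file already existed


if __name__ == "__main__":
    # Demo with placeholder values in a throwaway directory.
    demo_path = os.path.join(tempfile.mkdtemp(), ".passwd-s3fs")
    write_passwd_s3fs(demo_path, "AWS_ACCESS_KEY_ID", "AWS_SECRET_ACCESS_KEY")
    print(demo_path, oct(os.stat(demo_path).st_mode & 0o777))
```

For real use, the path would be `os.path.expanduser("~/.passwd-s3fs")` and the two placeholder strings would be replaced by the actual keys.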
